Artificial Intelligence | June 17, 2023

While most of the attention in artificial intelligence is on ChatGPT, Google Bard, and other tools that work with text and images, Meta has just introduced MusicGen, its AI for generating music. It is completely free and already available to users.

MusicGen is an artificial intelligence model that generates music from a text description, with the option of using an external audio track to guide the result. It is built on the Transformer architecture, which was introduced by Google researchers.

This AI has been trained on over 20,000 hours of licensed music, including 10,000 high-quality tracks, as well as music catalogs from Shutterstock and Pond5. It could become a significant rival to MusicLM, the alternative that Google officially launched in May 2023.

In practice, the user writes a text description and can optionally attach an audio file. MusicGen extracts the melody from that file and works it into the generated piece.

However, the generated audio cannot be steered precisely. The same description can produce several different results, because the AI runs the complete generation process from scratch each time it is requested.
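The variation between runs can be illustrated with a toy sketch. It assumes, as is typical for Transformer-based generators, that the output is built by drawing one token at a time from a probability distribution. The token names, the probabilities, and the `sample_sequence` helper are made up for illustration and are not part of MusicGen.

```python
import random

# Hypothetical four-token vocabulary with made-up probabilities. A real model
# predicts a fresh distribution over audio tokens at every step.
TOKENS = ["kick", "snare", "hat", "rest"]
WEIGHTS = [0.4, 0.3, 0.2, 0.1]


def sample_sequence(seed, length=16):
    """Draw `length` tokens at random according to WEIGHTS, using a seeded generator."""
    rng = random.Random(seed)
    return rng.choices(TOKENS, weights=WEIGHTS, k=length)


# Same "prompt" (the same weights), different random draws.
first = sample_sequence(seed=1)
second = sample_sequence(seed=2)

# Reusing a seed reproduces the earlier sequence exactly.
repeat = sample_sequence(seed=1)

print(first)
print(second)
print(first == repeat)
```

Because every request starts a new series of random draws, two generations from the same description will usually differ, which matches the behavior described above.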

It’s worth noting that this AI can only create a 12-second snippet of a song. This means that, for now, it is not possible to create complete songs, only small fragments. Nevertheless, this AI holds great potential.

Three versions of the model have been tested: a basic version with 300 million parameters and two larger ones with 1.5 billion and 3.3 billion parameters. In theory, larger models offer higher composition quality, but the intermediate model was rated as providing the best results, as reported by The Decoder.

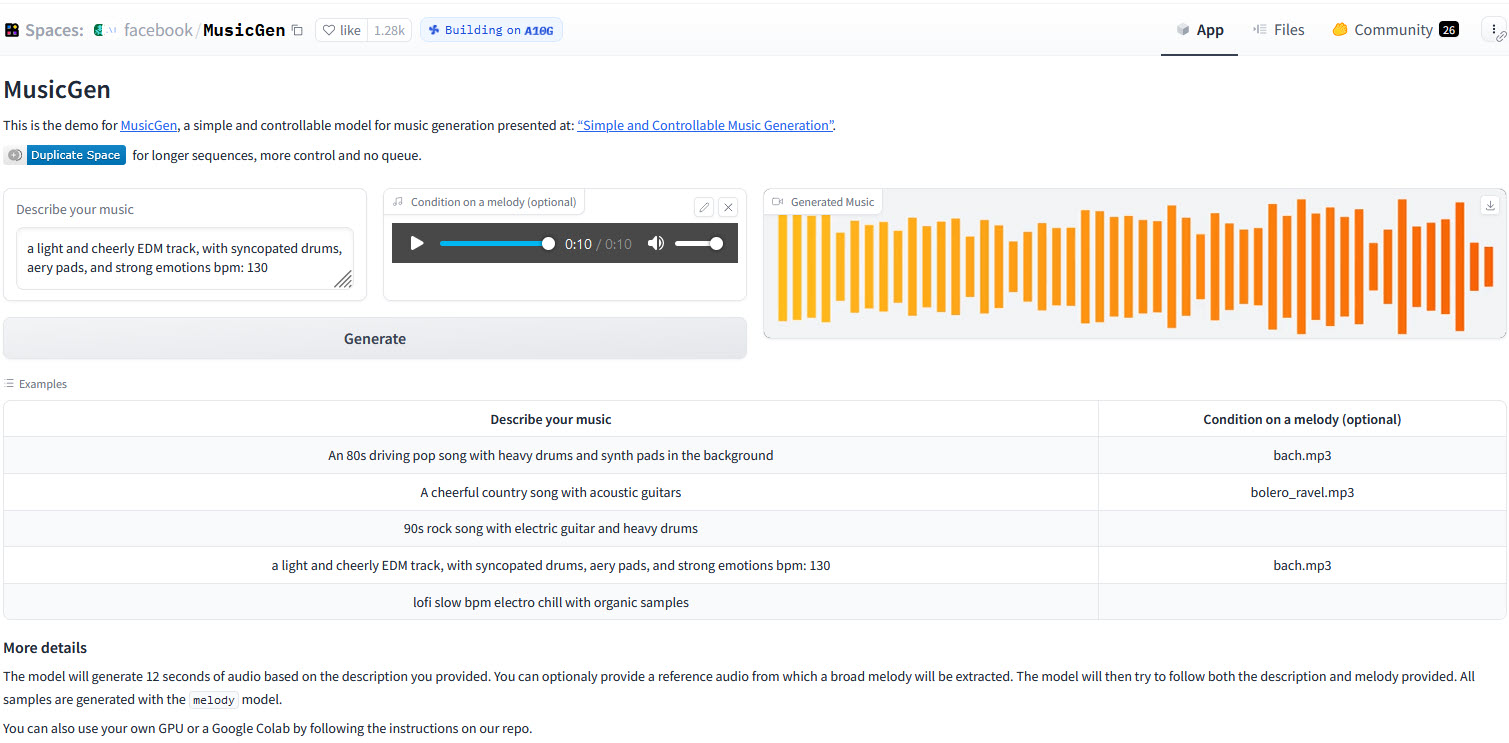

Meta’s AI has been released as open-source and can be downloaded from GitHub. There is also a demo available on the Hugging Face project portal. To try it out, simply go to the portal, write a description in English, and click on the Generate button. The AI’s behavior can also be conditioned by uploading an audio file from which the melody can be extracted.

How long the result takes to appear depends on how many people are using the demo at the same time, but it can exceed 5 minutes. Keep in mind that you cannot control how the melody is used, although you can run the generation several times to get a different result.

In our tests, many requests ended with the AI showing a connection error. On other occasions, after the wait, we were able to listen to the 12 generated seconds, and the results, while unpredictable, were very convincing.

At the bottom of the page, Meta provides a series of examples to help craft the descriptions. Clicking on the name of the audio files adds them to the file box for use as examples.
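For readers who would rather run the open-source release locally than wait on the demo, the following is a minimal sketch based on Meta's `audiocraft` package from GitHub. It assumes `audiocraft` and its PyTorch dependencies are installed. The `generate_clip` helper, the output file name, and the default checkpoint name are our own choices for illustration, and the checkpoint name accepted by `get_pretrained` may differ between package versions.

```python
def generate_clip(description, duration_s=12.0,
                  model_name="facebook/musicgen-small",
                  out_stem="musicgen_clip"):
    """Generate one clip from a text description and save it as a WAV file.

    `generate_clip` is a hypothetical helper, not part of audiocraft.
    """
    # Imported lazily so this file can be loaded without audiocraft installed.
    from audiocraft.models import MusicGen
    from audiocraft.data.audio import audio_write

    # Assumption: the checkpoint name depends on the installed version.
    model = MusicGen.get_pretrained(model_name)

    # Clip length in seconds; 12 matches the demo described above.
    model.set_generation_params(duration=duration_s)

    # One description in, one waveform out (batch of size 1).
    wav = model.generate([description])

    # audio_write adds the ".wav" extension to the stem by default.
    audio_write(out_stem, wav[0].cpu(), model.sample_rate, strategy="loudness")
    return f"{out_stem}.wav"
```

Calling `generate_clip("lo-fi hip hop beat with mellow piano")` should download the model weights on first use and write `musicgen_clip.wav` to the current directory. Generation is much faster on a GPU, and, as with the demo, running it again will give a different result.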