Artificial Intelligence | May 23, 2023

When Facebook, Inc. rebranded as Meta Platforms, Inc. in October 2021, Mark Zuckerberg, unless he could see the future, probably didn’t imagine that his ambitious bet on the metaverse would run into a monumental obstacle about a year later.

In November 2022, OpenAI introduced ChatGPT, and within days, nearly everyone was talking about the conversational chatbot’s capabilities. For many, this move marked the starting point of a race to lead in the field of artificial intelligence that shook the technology industry profoundly.

Some companies were in a more favorable position than others. Microsoft was undoubtedly one of them. The folks in Redmond had invested $1 billion in the Sam Altman-led company in 2019, and when they saw what was happening, they opened their checkbook again, this time for a reported $10 billion.

In the midst of it all, Meta kept burning through a fortune on the metaverse, a long-term idea that posed numerous challenges. Achieving the desired results would require significant advances in virtual and augmented reality. A solid business model had to be developed. And, lastly, it would take years to become profitable.

The real opportunity, it seemed, lay in cutting-edge artificial intelligence. It’s not that the social media company lacked experience in the field; its content recommendation systems and advertising platform, for instance, rely heavily on advanced algorithms.

However, it lagged behind in demonstrating major advances in next-generation language models. According to documents seen by Reuters, the company’s infrastructure needed substantial changes to catch up, and its plan to deploy an in-house AI chip in production did not fully materialize.

The decisive shift reportedly took place in late summer 2022, but only now are we starting to see the results. While Meta maintains its commitment to the metaverse, it is clearly putting a strong focus on AI, with projects involving generative algorithms and beyond.

On Thursday, Zuckerberg unveiled four innovations aimed at “powering new AI experiences” at Meta: an upgrade to the company’s existing AI data center, the Research SuperCluster; a new in-house chip design; the design of a new data center; and a programming assistant. Let’s focus on the first three.

In January of last year, we learned that Meta had been developing an AI data center for over a year, aiming to make it one of the most powerful of its kind. Like many similar projects, the so-called AI Research SuperCluster (RSC) was planned to be built in stages.

The second phase of the RSC, initially scheduled to be operational by mid-2022, has just been completed. Meta adjusted the design along the way, and the finished system is rated at nearly 5 exaFLOPS of mixed-precision computing power. Most of that power comes from costly, high-end NVIDIA hardware.

Inside the Menlo Park company’s data center are 2,000 NVIDIA DGX A100 systems housing a total of 16,000 NVIDIA A100 GPUs, a chip launched in 2020. They are linked by NVIDIA Quantum InfiniBand, a high-performance interconnect fabric with 16 Tb/s of bandwidth.
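The figures above fit together with some simple arithmetic. The sketch below assumes 8 A100 GPUs per DGX A100 system and NVIDIA's published peak of 312 TFLOPS per A100 for dense FP16/BF16 Tensor Core math; both constants are our assumptions, not numbers from Meta's announcement. It shows that "nearly 5 exaFLOPS" matches the theoretical peak of 16,000 A100s, not a measured sustained rate.

```python
# Back-of-the-envelope check of the RSC figures quoted in the article.
# Assumptions (not from Meta's post): 8 GPUs per DGX A100 system, and a
# peak of 312 TFLOPS per A100 for dense FP16/BF16 Tensor Core math.

DGX_SYSTEMS = 2_000
GPUS_PER_DGX = 8
A100_PEAK_TFLOPS = 312  # dense mixed-precision peak, without sparsity


def total_gpus(systems: int, gpus_per_system: int) -> int:
    """Number of GPUs across all DGX systems."""
    return systems * gpus_per_system


def peak_exaflops(gpus: int, tflops_per_gpu: float) -> float:
    """Theoretical peak in exaFLOPS (1 exaFLOPS = 1,000,000 teraFLOPS)."""
    return gpus * tflops_per_gpu / 1e6


gpus = total_gpus(DGX_SYSTEMS, GPUS_PER_DGX)
print(gpus)                                              # 16000
print(round(peak_exaflops(gpus, A100_PEAK_TFLOPS), 3))   # 4.992
```

Real training jobs sustain only a fraction of this peak, so the number describes the cluster's ceiling rather than its everyday throughput.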

The RSC has been operational for some time, and the company has already used it for various research projects. One of them is LLaMA, a large language model publicly announced earlier this year, which can be seen as a competitor to OpenAI’s generative systems such as GPT.

With the recent update, it is expected that this data center will play a significant role in Meta’s future endeavors. The company states that it will continue to use it for training language models and exploring other areas of generative AI. Additionally, Meta emphasizes that the data center will be instrumental in the development of the metaverse.

Meta’s AI infrastructure currently runs on NVIDIA hardware, and NVIDIA has emerged as one of the big winners of the AI race. Following in the footsteps of Google, which designs its own TPUs, Meta decided to develop its own high-performance chip for AI data centers, with a very specific focus.

Solutions based on GPUs (graphics processing units) are often the right choice for AI data centers, largely because they can carry out thousands of operations in parallel. However, as Meta explains in a blog post, the company has concluded that GPUs are not the best fit for every workload.

According to the social media company, GPUs play a crucial role in training AI models but are not as efficient for inference. For context, inference is the phase of machine learning that comes after training.

During training, the model learns from data and its parameters are repeatedly adjusted, a time-consuming and computationally intensive process. During inference, the trained model puts that knowledge into practice to generate responses, using only a fraction of the computing power for each request.
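To give a sense of scale for language models like LLaMA, here is a rough estimate using two common rules of thumb from the scaling-law literature: training a dense transformer costs about 6·N·D FLOPs (N parameters, D training tokens), while generating one token at inference costs about 2·N FLOPs. These approximations, and the choice of a 7-billion-parameter model trained on about 1 trillion tokens (the smallest LLaMA configuration), are illustrative assumptions, not figures from Meta's MTIA announcement, which targets its own recommendation workloads.

```python
# Rough compute comparison between training and inference for a dense
# transformer language model, using common rule-of-thumb approximations:
#   training  ~ 6 * N * D FLOPs   (N = parameters, D = training tokens)
#   inference ~ 2 * N FLOPs per generated token (forward pass only)
# These ignore attention overhead and other details; estimates only.


def training_flops(params: float, tokens: float) -> float:
    """Forward plus backward pass over every training token."""
    return 6 * params * tokens


def inference_flops_per_token(params: float) -> float:
    """A single forward pass to produce one token."""
    return 2 * params


N = 7e9   # assumed: 7B parameters, the smallest LLaMA model
D = 1e12  # assumed: ~1 trillion training tokens

train = training_flops(N, D)
infer = inference_flops_per_token(N)
print(f"training:  {train:.1e} FLOPs")             # 4.2e+22
print(f"inference: {infer:.1e} FLOPs per token")   # 1.4e+10
print(f"ratio:     {train / infer:.1e}")           # 3.0e+12
```

Each individual inference request is cheap, but a service answering billions of requests runs inference constantly, which is why per-request efficiency matters enough to justify dedicated hardware.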

Based on this premise, Meta changed its approach. Rather than relying on GPUs or general-purpose CPUs for inference, it set out to build its own family of chips, the Meta Training and Inference Accelerator (MTIA), a custom accelerator (ASIC) designed specifically for inference workloads.

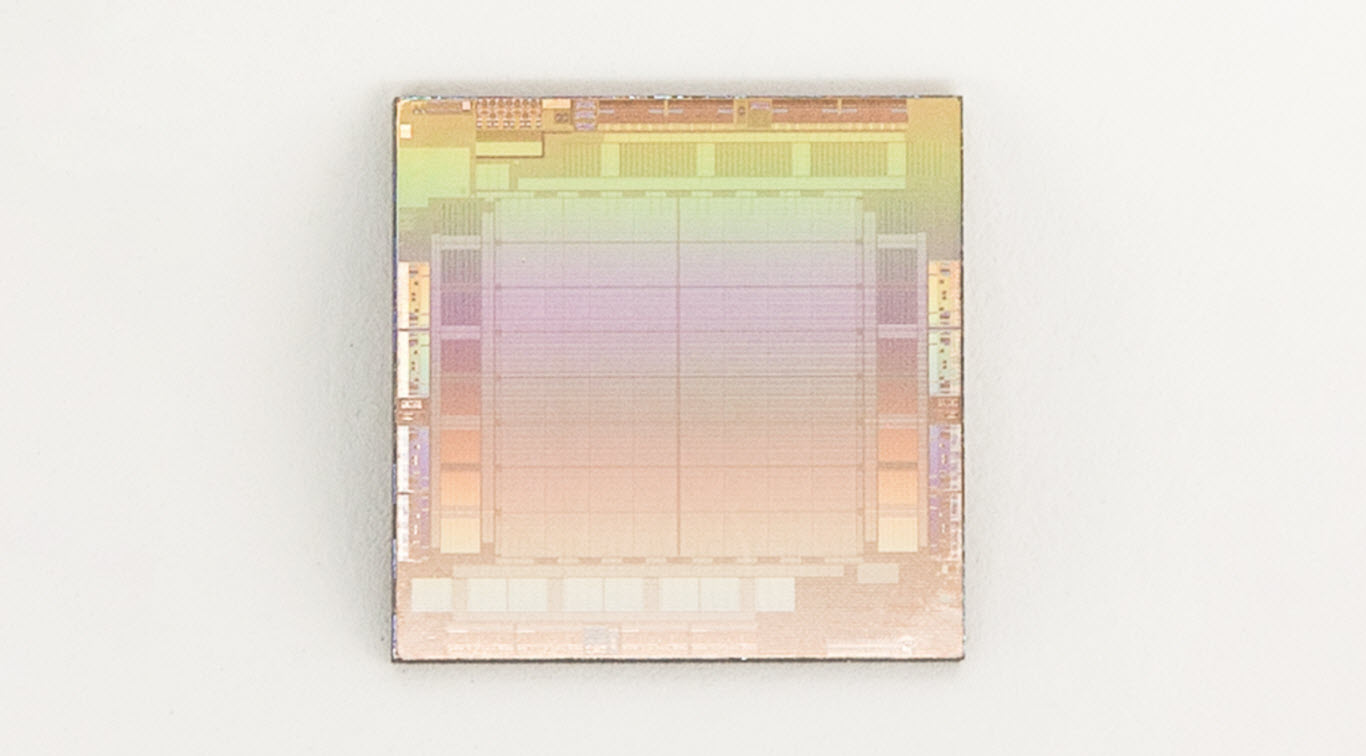

Although the project began in 2020, the company has only now decided to discuss it publicly, and it has shared some interesting technical details. The chips are manufactured on TSMC’s 7-nanometer process and have a thermal design power (TDP) of 25 W. Each is designed to support up to 128 GB of memory.

Each MTIA chip is mounted on an M.2 board that connects through a PCIe Gen4 x8 slot. In a data center, many of these chips work in unison to deliver high levels of computing power. These specifications, described only in broad terms, are not final and continue to evolve.
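To put the PCIe Gen4 x8 link in perspective, the sketch below computes its approximate bandwidth in one direction. It relies on the PCIe 4.0 signaling rate of 16 GT/s per lane and 128b/130b line encoding, and it ignores packet and protocol overhead, so real throughput is somewhat lower. The comparison with the 128 GB memory figure is our own illustration, not something Meta reported.

```python
# Approximate one-direction bandwidth of a PCIe Gen4 link.
# PCIe 4.0 signals at 16 GT/s per lane with 128b/130b encoding; protocol
# overhead (packet headers, flow control) is ignored in this estimate.

GEN4_GT_PER_S = 16.0
ENCODING_EFFICIENCY = 128 / 130
MTIA_MAX_MEMORY_GB = 128  # upper memory figure quoted in the article


def pcie_gen4_gbytes_per_s(lanes: int) -> float:
    """Usable bandwidth in GB/s for a Gen4 link with the given lane count."""
    gbits = GEN4_GT_PER_S * ENCODING_EFFICIENCY * lanes
    return gbits / 8  # bits -> bytes


x8 = pcie_gen4_gbytes_per_s(8)
print(f"{x8:.2f} GB/s per direction")                            # 15.75 GB/s per direction
print(f"{MTIA_MAX_MEMORY_GB / x8:.3f} s to stream 128 GB once")  # 8.125 s to stream 128 GB once
```

Moving a full memory's worth of data over the host link takes seconds, which illustrates why an inference accelerator keeps model weights in its own local memory rather than fetching them from the host on every request.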

It is not yet clear how widely these Meta-designed, TSMC-manufactured chips will be deployed, but the next announcement offers a clue. The company is already working on its next-generation data centers, which will complement the work of the RSC, and MTIA chips will be at their heart.

Meta claims that controlling both the hardware and software of its upcoming data centers will give it an “end-to-end experience” that significantly improves their capabilities, although it has not given specific dates. Still, it is worth remembering that we are in the middle of a race.