Artificial Intelligence | May 22, 2023

Despite ChatGPT's remarkable virtues, a new study has identified six security implications associated with its use.

ChatGPT, a groundbreaking tool in natural language processing, is not without risks and challenges. Despite its many advantages, it raises significant concerns that must be addressed to ensure this technology is used responsibly and ethically.

Just yesterday, another article reported that Sam Altman, CEO of OpenAI, the company behind ChatGPT, addressed the United States Congress, warning about the potential dangers of uncontrolled artificial intelligence and the urgent need for regulation.

These challenges range from the generation of deceptive content and the presence of bias to the risk of abuse and inappropriate behavior. Addressing them promptly and ethically is essential to maximize the benefits of this tool and minimize its potential negative consequences.

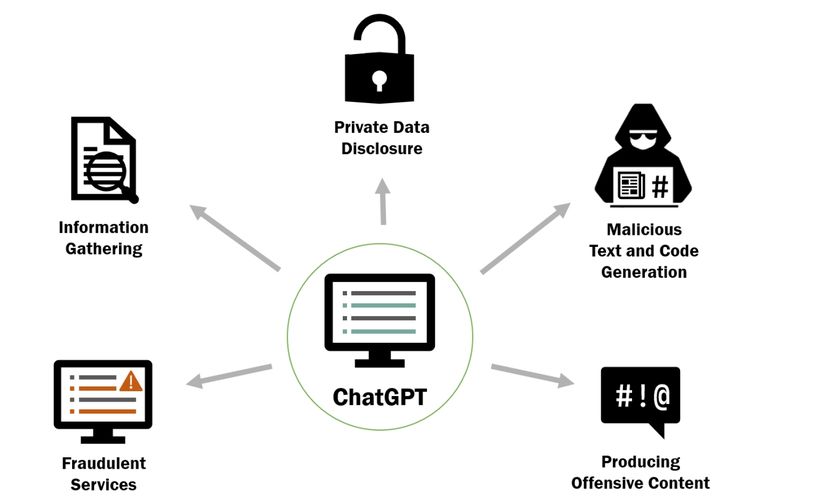

Specifically, the study identifies six major security implications associated with the use of ChatGPT: generation of fraudulent services, collection of harmful information, disclosure of private data, generation of malicious text, production of malicious code, and generation of offensive content.

The use of ChatGPT raises concerns about information collection. Although ChatGPT is not designed to seek out personally identifiable information, users may share confidential or sensitive information during their conversations with the chatbot.

For example, if a user provides personal details such as names, addresses, or phone numbers, this information could be stored and used improperly. If the data is stored or logged without proper consent, it poses privacy and security risks, especially if it falls into the wrong hands or is used for malicious purposes.

As ZDNet explained, the ChatGPT outage of March 20, during which a bug allowed some users to see the titles of other users' chat histories, is a concrete example of these concerns.

“This could be used to aid in the first step of a cyber attack when the attacker is gathering information about the target to find where and how to attack most effectively,” says the study.

ChatGPT could be used to generate content that promotes fraudulent services, such as fake investment offers, pyramid schemes, or deceptive sales pitches. This could lead people to lose money or disclose sensitive personal information.

It could also be used to provide false or misleading legal or financial advice, leading people to make poor or even illegal decisions.

In addition, cybercriminals could use ChatGPT to create content that appears legitimate, such as phishing emails or deceptive messages, increasing the risk that people fall victim to scams or cyberattacks.

If used improperly, it could generate illegal content such as guides for illegal activities, incitement to violence, or child pornography.

The generation of malicious code is another concern. ChatGPT could be used to write viruses, trojans, or other types of malware, enabling attacks that compromise the security of computer systems.

It could also be used to discover and exploit vulnerabilities in computer systems or applications. This could allow attackers to take control of systems or access confidential information.

Finally, it could be used to produce malicious code that is harder for security systems and antivirus software to detect.

While the model can generate useful and valuable content, it can also be used inappropriately or irresponsibly, as Computer Hoy has shown on several occasions.

For example, if ChatGPT is trained on data that contains biases or prejudices, the model may generate content that is offensive, discriminatory, or harmful towards certain groups of people. It could also be used to generate fake content or misinformation, contributing to the spread of misleading information or conspiracy theories.

If users interact with ChatGPT without considering the confidentiality of the information they share, they could unintentionally reveal sensitive private data. The same risk applies when users ask it to generate documents, such as reports or transcripts, that contain sensitive private data without the knowledge or consent of the people concerned.

Ultimately, if the systems that implement ChatGPT lack adequate security measures or suffer an attack, this data could be exposed in a breach.